Ethel was first introduced in Spring semester 2023 as an AI-supported assistance platform for teaching and learning. It has since grown into a service that supports more than 20 courses with a combined enrollment of over 4000 learners. In upcoming semesters, Ethel Commons will use this version of Ethel.

Chatbots

The most widely used functionality in the current Ethel system is course-specific chatbots. Instructors upload course materials such as lecture notes, exercise sheets, model solutions, and lecture slides. These documents are indexed and used as references in chat sessions.

During a chat session, students can ask conceptual or procedural questions. The system responds with explanations that are explicitly tied to relevant excerpts from the uploaded resources. Instructors can supply additional prompts to influence the style of the conversation, for example by asking the system to respond in a more Socratic manner.
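The flow described above (look up relevant excerpts, then combine them with the student's question and an optional instructor style prompt) can be illustrated with a toy sketch. This is not Ethel's implementation: the text does not say how documents are indexed, so the bag-of-words cosine similarity used here is a stand-in, and the names `retrieve`, `build_prompt`, and `style_instruction` are hypothetical.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase a string and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(question, excerpts, k=2):
    """Return up to k (source, text) excerpts most similar to the question.

    `excerpts` is a list of (source, text) pairs; this stand-in "index"
    is an assumption, not the indexing method used by Ethel.
    """
    query = Counter(tokenize(question))
    scored = [(cosine(query, Counter(tokenize(text))), source, text)
              for source, text in excerpts]
    scored.sort(key=lambda row: row[0], reverse=True)
    return [(source, text) for score, source, text in scored[:k] if score > 0]


def build_prompt(question, excerpts, style_instruction=""):
    """Assemble a prompt that cites the retrieved excerpts by source."""
    parts = []
    if style_instruction:
        parts.append(style_instruction)  # optional instructor-supplied style
    parts.append("Reference excerpts:")
    parts.extend(f"[{source}] {text}" for source, text in retrieve(question, excerpts))
    parts.append(f"Student question: {question}")
    return "\n".join(parts)
```

Labeling each excerpt with its source in the prompt is one simple way to let a response point back to the uploaded material; a production system would more plausibly use embedding-based search over document chunks.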

Exercise Feedback

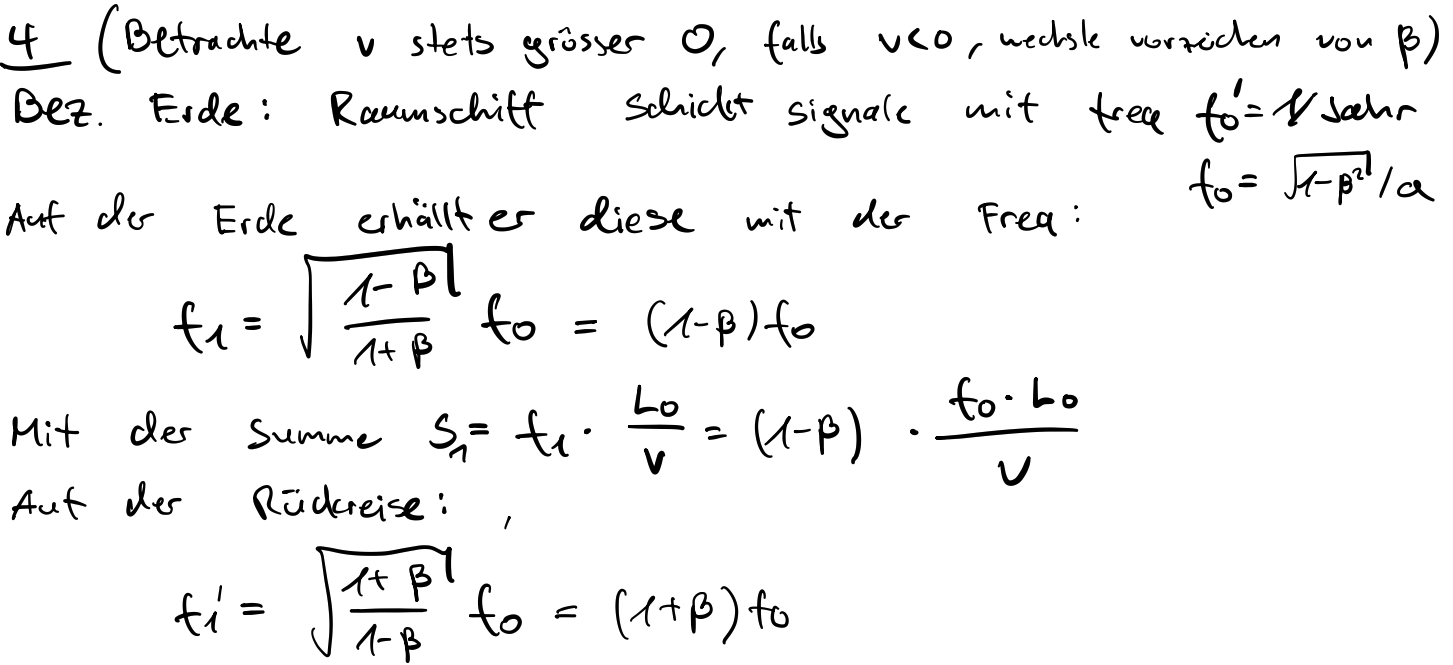

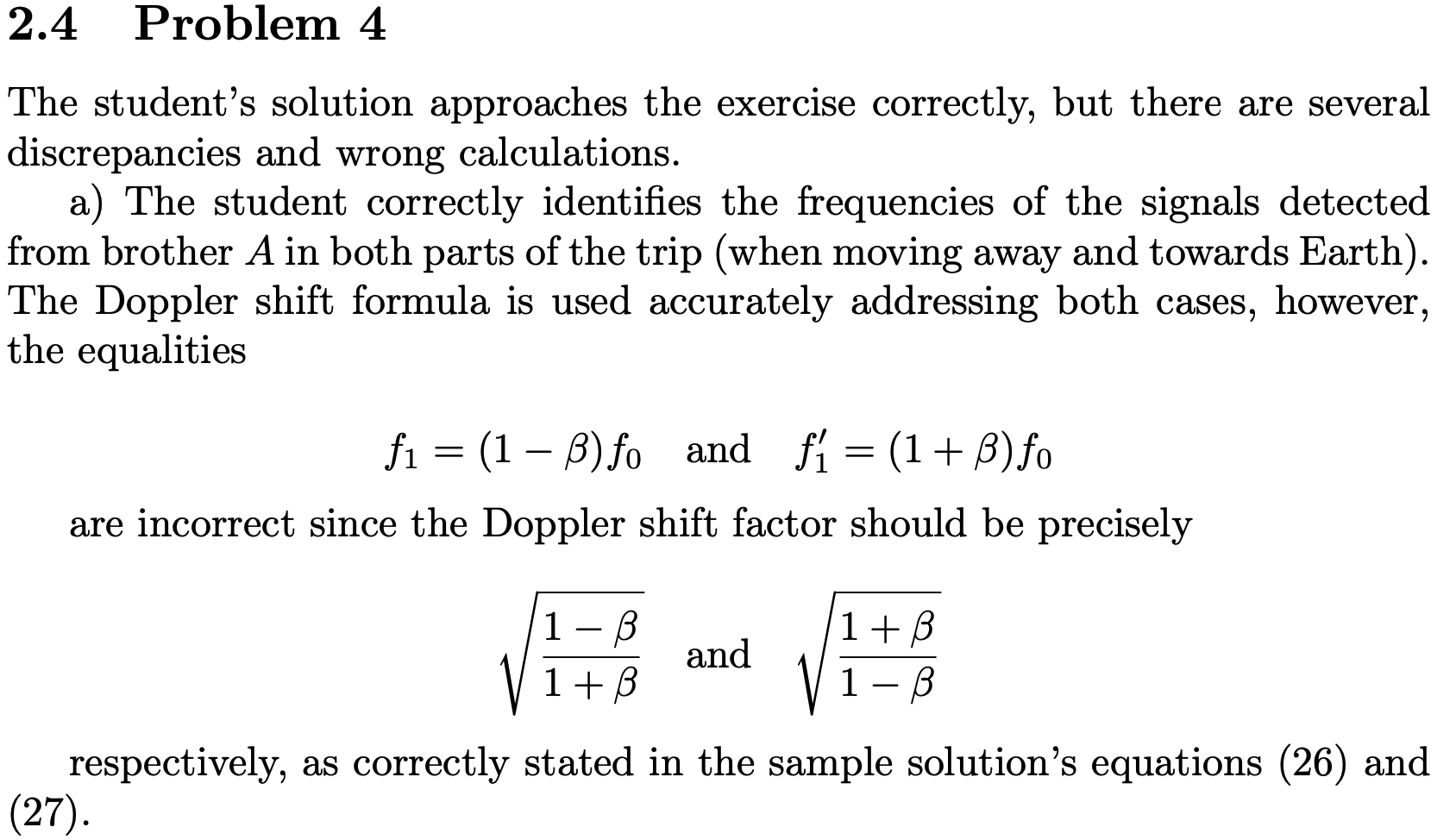

Several courses use Ethel to provide formative feedback on written exercises. Instructors set up digital “drop boxes” by uploading exercise sheets and, optionally, sample solutions and detailed feedback rubrics.

Students solve the problems on paper, then photograph or scan their handwritten work and upload the images to Ethel. Using multimodal large language models, Ethel interprets the handwritten derivations, sketches, and diagrams and generates individualized feedback on each student's solution.

Exam Grading Assistance

Ethel has been used in exploratory studies to assist with grading handwritten, high-stakes examinations in large-enrollment courses in thermodynamics, chemistry, and calculus. These studies focus on open-ended problems where students show their work on paper.

After the exam, student booklets are scanned and the relevant answer regions are linked to a detailed rubric specifying how points are assigned. Ethel then proposes scores for each student–item combination, together with measures that indicate whether human review is recommended.

Test-theoretical models are used to flag student–item rubric combinations that are “uncertain” and should be routed to human graders. Several filter criteria are used:

- Correctness: The AI assigns partial scores on a continuous scale. A post-hoc threshold on this score determines which items are treated as sufficiently correct for automatic acceptance.

- Uncertainty: For each student–item combination, a risk measure compares the solving probability predicted by item response theory (IRT) with the AI-assigned partial score. Large discrepancies trigger manual review.

- Correct-only: In the strictest setting, only items that are graded fully correct and low-risk by the AI are accepted automatically; all others are passed to human graders.
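The three criteria can be sketched as a single routing function. This is a minimal illustration under stated assumptions, not the studies' actual procedure: the text does not specify the IRT model or the form of the risk measure, so the sketch assumes a two-parameter logistic model and takes the risk to be the absolute difference between predicted probability and AI score. The threshold values and the names `GradedItem`, `risk`, and `route` are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class GradedItem:
    ability: float               # student ability estimate (theta), assumed given
    difficulty: float            # item difficulty (b), assumed given
    ai_score: float              # AI-assigned partial score, scaled to [0, 1]
    discrimination: float = 1.0  # item discrimination (a), assumed 2PL parameter


def irt_probability(theta, b, a=1.0):
    """Two-parameter logistic IRT model: probability of solving the item."""
    return 1.0 / (1.0 + math.exp(-a * (theta - b)))


def risk(item):
    """Assumed risk measure: gap between IRT prediction and AI score."""
    predicted = irt_probability(item.ability, item.difficulty, item.discrimination)
    return abs(predicted - item.ai_score)


def route(item, score_threshold=0.8, risk_threshold=0.4, correct_only=False):
    """Return "auto" to accept the AI score, or "human" for manual review.

    Both thresholds are illustrative placeholders, not values from the studies.
    """
    low_risk = risk(item) <= risk_threshold
    if correct_only:
        # Strictest setting: only fully correct, low-risk items are accepted.
        accepted = item.ai_score >= 1.0 and low_risk
    else:
        accepted = item.ai_score >= score_threshold and low_risk
    return "auto" if accepted else "human"
```

For example, under these assumptions a full AI score for a student with a low ability estimate yields a large discrepancy and is sent to a human grader, while the same score for a strong student is accepted.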

Teaching-assistant (TA) grading serves as the ground truth. By tightening the filtering thresholds, Ethel can increase agreement between AI-assigned and TA-assigned scores, at the cost of a larger fraction of items that still require manual grading. This allows instructors to tune how much workload they want to offload to the AI while maintaining human-in-the-loop safety.
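The trade-off can be made concrete by sweeping a threshold and recording, for each setting, the fraction of items left for manual grading and the AI–TA agreement among the automatically accepted items. The sketch below is illustrative only: the `sweep` function, the agreement tolerance, and the definition of agreement are assumptions, and the records are invented toy values chosen to make the trend visible, not data from the studies.

```python
def sweep(records, risk_thresholds, tolerance=0.1):
    """Tabulate manual-grading fraction and agreement per risk threshold.

    `records` holds (ai_score, ta_score, risk) tuples. An item is accepted
    automatically when its risk is at or below the threshold; agreement is
    the share of accepted items whose AI score is within `tolerance` of the
    TA score (an assumed definition).
    """
    rows = []
    for threshold in risk_thresholds:
        accepted = [r for r in records if r[2] <= threshold]
        manual_fraction = 1 - len(accepted) / len(records)
        if accepted:
            agreeing = sum(abs(ai - ta) <= tolerance for ai, ta, _ in accepted)
            agreement = agreeing / len(accepted)
        else:
            agreement = None  # nothing accepted, so agreement is undefined
        rows.append((threshold, manual_fraction, agreement))
    return rows


# Invented toy records: (ai_score, ta_score, risk). Not real study data.
toy_records = [
    (1.0, 1.0, 0.05), (1.0, 1.0, 0.10), (0.5, 0.5, 0.20), (1.0, 0.5, 0.45),
    (0.0, 0.0, 0.15), (1.0, 0.0, 0.70), (0.5, 1.0, 0.55), (0.0, 0.0, 0.30),
]

for threshold, manual, agreement in sweep(toy_records, [0.8, 0.5, 0.3]):
    print(f"risk <= {threshold}: manual fraction {manual:.3f}, agreement {agreement:.3f}")
```

On this toy data, lowering the risk threshold from 0.8 to 0.3 raises agreement among accepted items while the share of items needing manual grading grows, which is the pattern an instructor would weigh when choosing a setting.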

Proofs of Concept

Beyond chatbots, exercise feedback, and exam grading assistance, several proofs of concept explore how Ethel can support everyday teaching workflows:

- Interactive on-demand practice problems: AI-generated practice tasks based on course materials, which students can request whenever they want additional practice.

- QuickPoll: A tool for collecting and summarizing open-ended student responses during lecture.

- Accessibility improvements: Use of multimodal LLMs to improve accessibility of lecture materials, for example by creating detailed descriptions of LaTeX-generated figures and equations.